Anyone ever consider what all these brilliant minds attempting to squeeze 'decentralization' out of #Bluesky might be able to accomplish if they unleashed their talents on the #Fediverse? 🤷

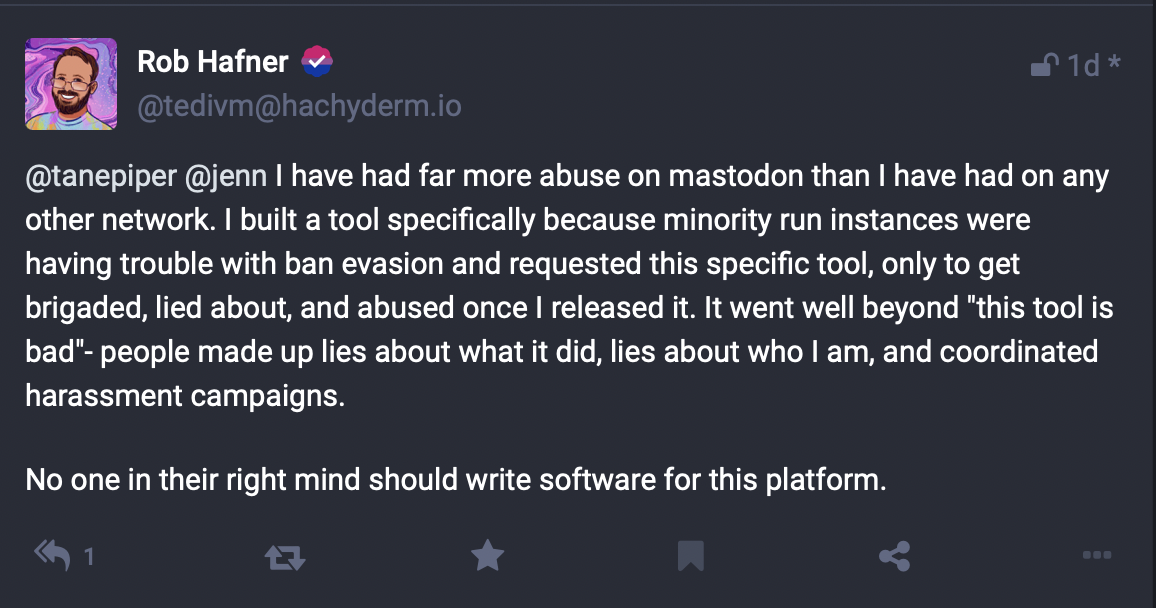

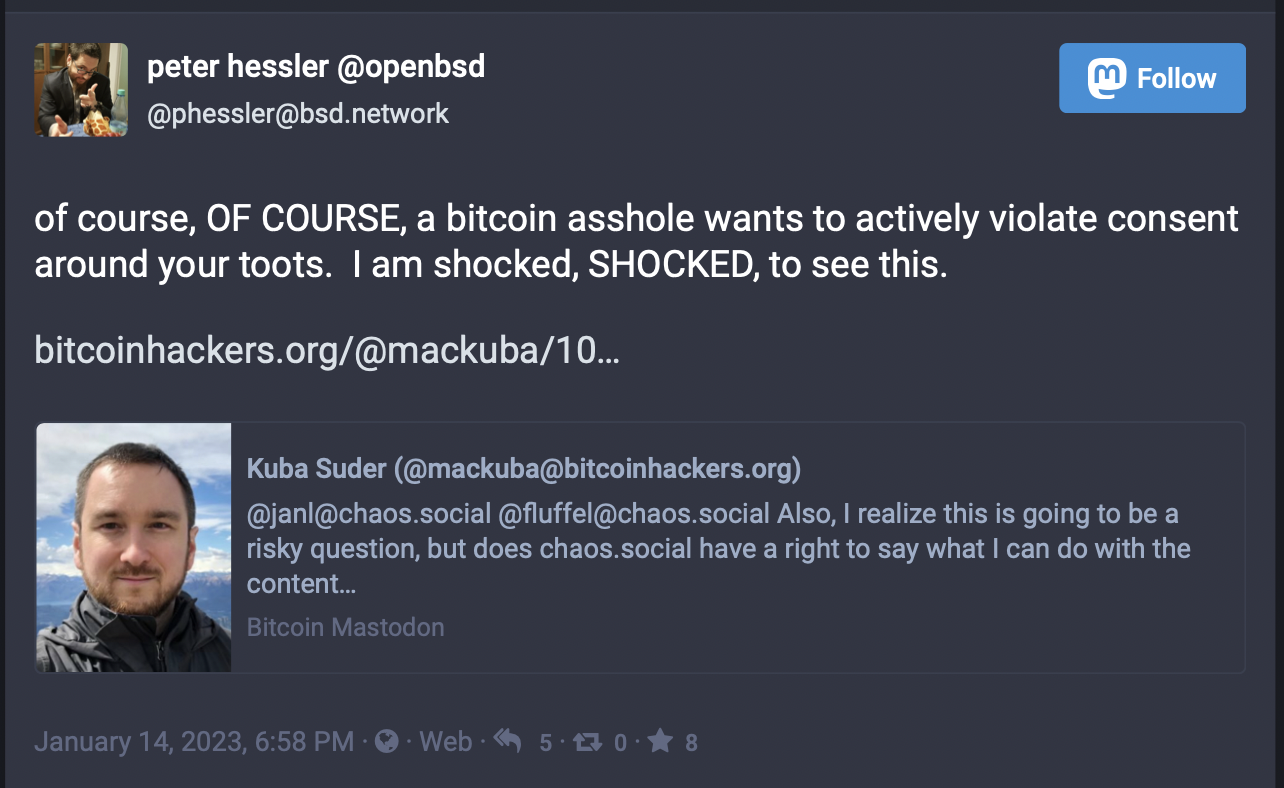

@mastodonmigration Probably something like this:

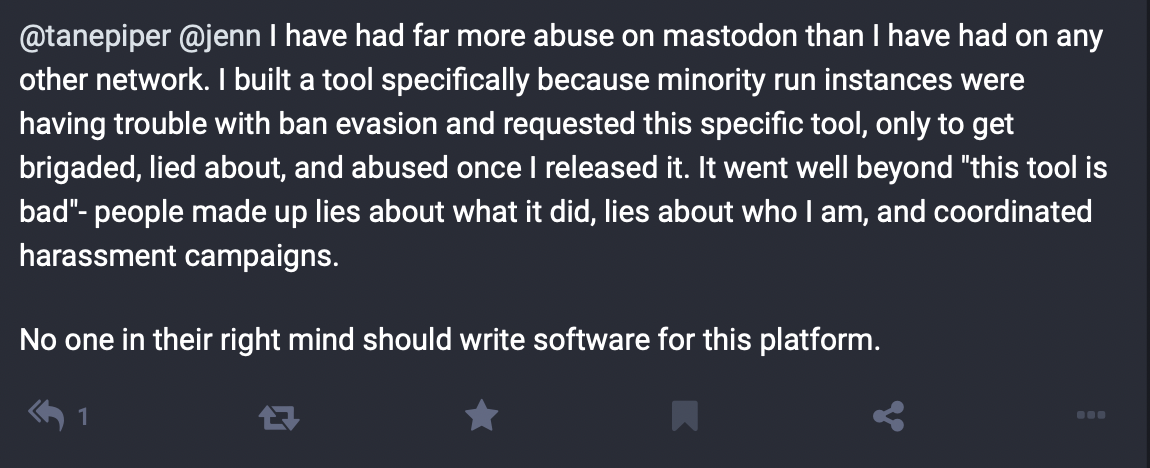

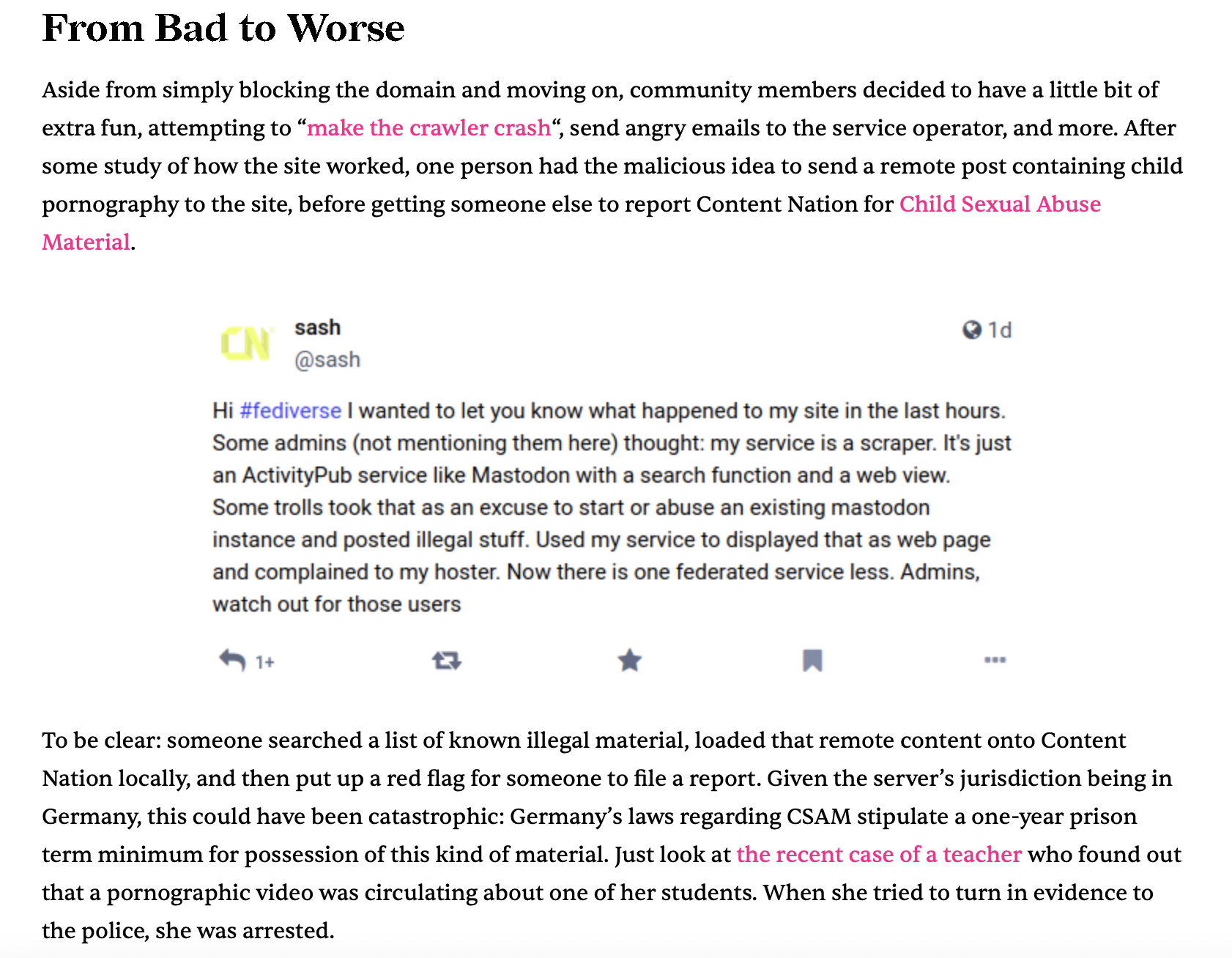

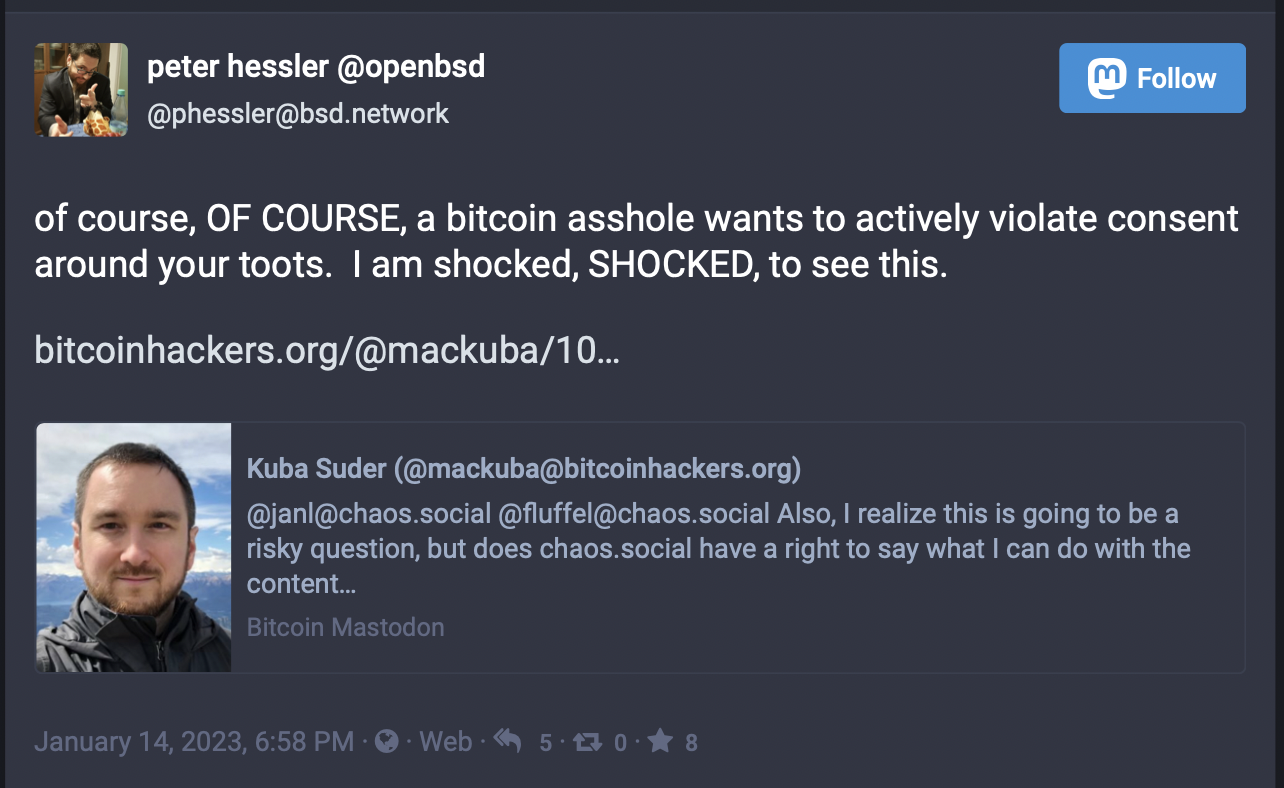

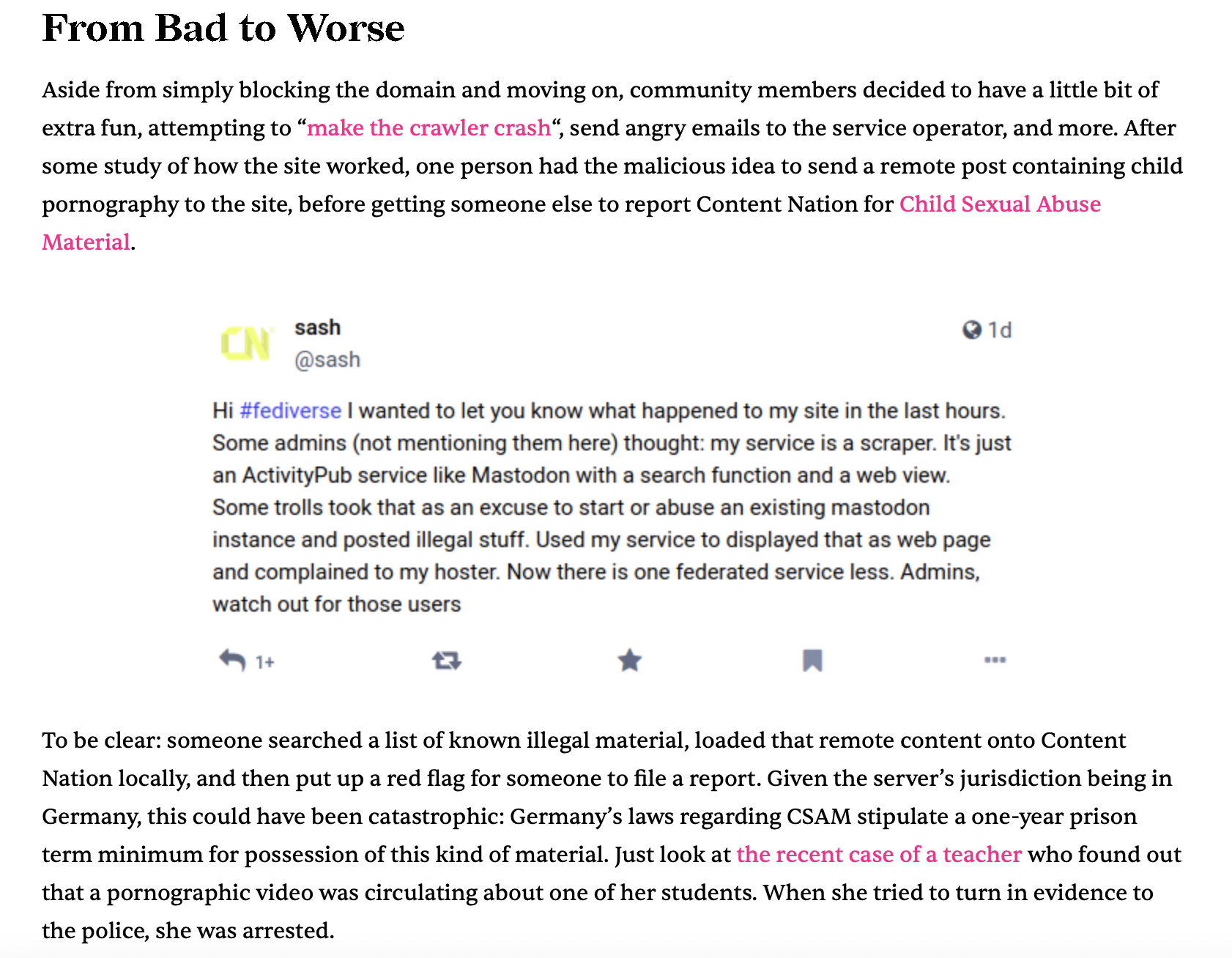

On Bluesky, when you build new tools, you get widely reposted; if you do that on Mastodon, you get widely harassed…

These seem really bad and unkind, but I'm not sure it's correct to make such a broad generalization from a few cherry-picked examples. There are lots of well-received and lauded Fedi tools.

But this brings up a specific concern. People criticize Mastodon as if it were one fixed thing, cast in stone. The entire premise of public social media is that it's what people make of it. Rather than running to a corporate silo, why not stay and help build something great that addresses these concerns?

@mastodonmigration It will always baffle me why they keep trying. I don't get it.

Bonfire Dinteg Labs

This is a Bonfire demo instance for testing purposes. This is not a production site. There are no backups for now. Data, including profiles, may be wiped without notice. No service or other guarantees expressed or implied.